California Eyes Landmark AI Regulation

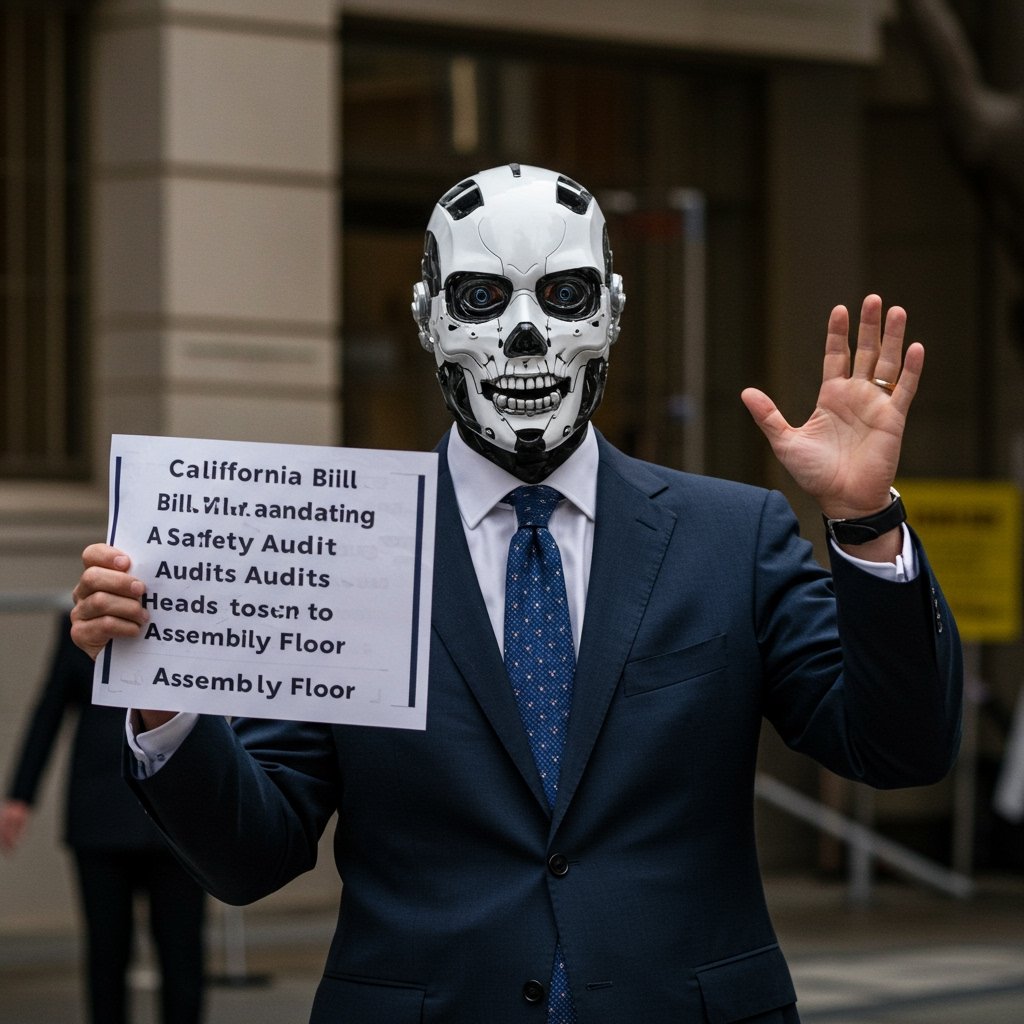

California lawmakers are approaching a significant decision on the future of artificial intelligence governance. The California Assembly is nearing a floor vote on AB 2035, a pioneering state-level bill to regulate large-scale AI models. Introduced by Assembly Member David Chen, the legislation would impose safeguards on developers of the most powerful AI systems now being built, chiefly by mandating rigorous safety audits and public disclosure.

AB 2035’s arrival on the Assembly floor marks a pivotal moment in the debate over responsible AI development. Lawmakers are weighing the societal risks posed by increasingly sophisticated AI systems, including bias, safety failures, misuse, and gaps in accountability. The bill is California’s direct response: a framework requiring that powerful AI models undergo independent evaluation before deployment and that key information about their capabilities and limitations be made public.

Understanding AB 2035: Scope and Requirements

AB 2035 targets only the largest and most potentially impactful AI systems. It applies to models that use more than 10,000 petaFLOPS of compute during training or that exceed 1 trillion parameters; crossing either threshold brings a model within the bill’s scope. These thresholds are meant to capture frontier models: systems that demand immense computational resources and operate at unprecedented scale, often as general-purpose AI. The rationale is that such systems’ scale and potential impact warrant a higher level of scrutiny and risk management.

Under the proposed law, developers of qualifying models would have to commission independent third-party audits assessing risks in areas such as safety, bias, and security. At least part of the audit findings would then be publicly disclosed. This transparency requirement is meant to inform policymakers, researchers, and the public about what powerful AI models can do and what hazards they pose, fostering greater understanding and accountability across the AI ecosystem.

Assembly Member David Chen, the bill’s author, has stressed the need for proactive measures to address the challenges of advanced AI. The legislation reflects a growing view among some policymakers that voluntary industry guidelines may not be enough to mitigate the full range of potential risks and that binding regulation is needed.

Industry Reacts: Concerns Over Economic Impact and Compliance

Although AB 2035’s stated goals align with the broader conversation about responsible AI, the bill faces significant resistance from industry groups. TechNet, a national network of technology CEOs and senior executives, and the influential California Chamber of Commerce have both raised substantial concerns. Their chief worries are the bill’s potential economic impact and the compliance burden it could impose, particularly on the West Coast tech firms at the forefront of AI innovation.

Critics argue that the mandatory audit and disclosure requirements would be costly and complex and could slow the pace of innovation. They suggest that overly stringent rules might put California-based companies at a competitive disadvantage against firms in other states or countries with less prescriptive AI policies. Industry stakeholders have sought clarity on, and expressed apprehension about, the specifics of the audit requirements, the scope of disclosure, and the potential penalties for non-compliance. They also warn that the cost of rigorous safety audits and public disclosure processes could divert resources from research and development, potentially hindering growth in a critical sector of California’s economy.

Advocacy and Civil Liberties Perspectives

Civil liberties organizations, by contrast, have largely supported the principles behind AB 2035. Groups including the prominent Electronic Frontier Foundation (EFF) see the bill’s framework as a necessary step toward establishing guardrails for powerful AI technologies. They regard independent safety evaluations and greater transparency as crucial to mitigating risks such as algorithmic bias, surveillance capabilities, and other potential harms to individual rights and societal values.

While backing the bill’s overall direction, these organizations have also pushed for amendments to strengthen it. In particular, the EFF and other civil liberties advocates have called for stronger enforcement powers. Without robust compliance mechanisms and meaningful consequences for violations, they argue, the mandated audits and disclosures could become mere formalities that offer little substantive protection against the risks posed by large AI models. They want assurance that regulators will have the authority and resources to oversee the law’s implementation effectively and hold companies accountable.

Context and Significance

The debate over AB 2035 is unfolding amid growing national and international calls for AI regulation. As AI capabilities advance rapidly, governments worldwide are exploring approaches that range from voluntary frameworks to legally binding rules. As a global hub for technology and AI development, California holds a unique position in this conversation: its legislative actions in this area are closely watched and could influence policy discussions in other states and at the federal level.

The bill’s progress through the Assembly is widely seen as a significant step toward state-level AI governance. If it passes the Assembly floor, it will move to the California Senate for further consideration. Although AB 2035 still faces several legislative hurdles before it could become law, its current status underscores how seriously California lawmakers are taking the challenges and opportunities presented by advanced AI.

The Road Ahead: Assembly Floor Vote

The immediate future of AB 2035 rests on the upcoming Assembly floor vote, a critical juncture in the legislative process. Passage would keep the bill moving and could put California on track to become the first state to enact such comprehensive safety and transparency requirements for the most powerful AI models. The debate before the vote is expected to highlight the competing interests in AI regulation: the need for safety and accountability on one side, and the imperative to foster innovation and economic growth in the state’s vital tech sector on the other. All eyes are now on the Assembly as it prepares to vote on this landmark proposal.