California Senate Judiciary Committee Greenlights SB 321, Mandating AI Transparency

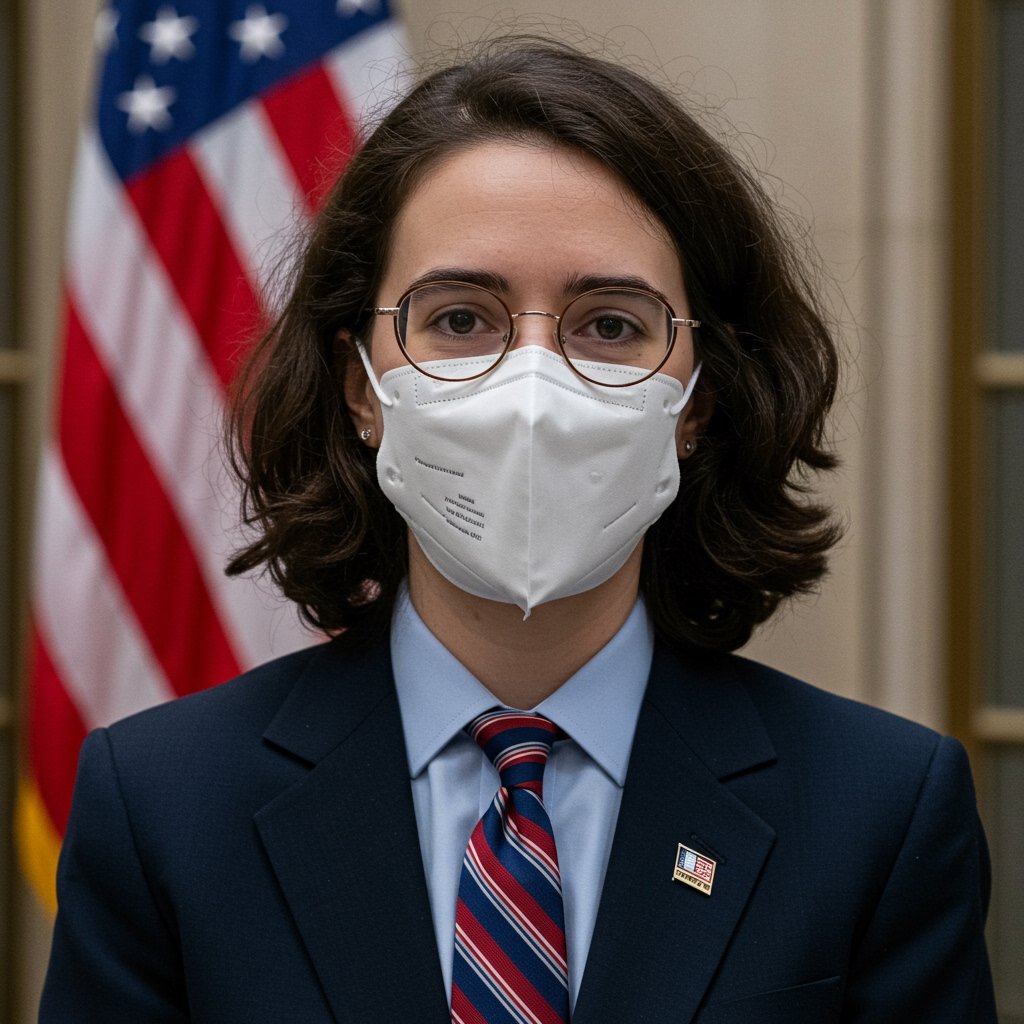

Sacramento, CA – In a pivotal move reflecting California’s proactive stance on regulating burgeoning artificial intelligence technologies, the California Senate Judiciary Committee today approved Senate Bill 321. This significant piece of legislation, authored by Senator Anya Sharma, is designed to establish crucial accountability measures for the companies developing and deploying large language models, a core component of many advanced AI systems.

The passage of SB 321 through the Judiciary Committee marks a critical step in the legislative process, signaling growing momentum within California’s government to address the potential societal impacts of unchecked AI development. The bill now advances to the powerful Senate Appropriations Committee, where its fiscal implications will be scrutinized before it can proceed to a full Senate floor vote.

Key Provisions: Data Origin Disclosure and Algorithmic Bias Audits

At the heart of Senate Bill 321 are two primary requirements aimed at increasing transparency and mitigating risks associated with powerful AI models. First, the bill requires tech companies developing large language models to disclose the origin of their training data. This provision seeks to shed light on the vast datasets used to train these models, which can include scraped web content, digitized books, and other diverse sources. Proponents argue that understanding the provenance of training data is essential for identifying potential biases, intellectual property issues, and privacy concerns embedded within the models from their inception.

The second major requirement of SB 321 is the submission of annual algorithmic bias audit reports to the state Attorney General’s office. This measure is intended to hold developers accountable for potential biases that may manifest in how their AI models function. Algorithmic bias can lead to discriminatory outcomes in areas such as hiring, loan applications, criminal justice, and content moderation. By requiring regular audits and reporting to a state oversight body, the bill aims to encourage companies to proactively identify and mitigate these biases, ensuring that AI systems operate more equitably and justly across the diverse Californian population.

Senator Anya Sharma has emphasized that the bill is not intended to stifle innovation but rather to create a framework of responsible development. “As AI becomes increasingly integrated into every facet of our lives, we have a fundamental responsibility to ensure it is developed and deployed safely and fairly,” Senator Sharma stated during the committee hearing. “SB 321 provides necessary guardrails by demanding transparency about training data and requiring companies to actively address algorithmic bias. This is about building public trust and ensuring that the future of AI benefits everyone, not just a select few.”

Navigating Support and Opposition

The legislative journey of SB 321 has been marked by vocal support from privacy advocates and civil liberties groups, as well as significant opposition from segments of the technology industry.

Organizations like the Electronic Frontier Foundation (EFF) have publicly backed the bill, viewing it as a vital step towards greater accountability in the AI sector. Privacy advocates argue that the opaque nature of AI development, particularly concerning the data used for training, poses risks to individual privacy and can perpetuate societal inequalities. They contend that disclosure requirements are necessary for external researchers, regulators, and the public to understand how models are built and where potential harms might originate. The requirement for algorithmic bias audits is also seen as a crucial mechanism for identifying and correcting biases that could lead to discriminatory outcomes in the deployment of AI.

Conversely, industry groups, including TechNet California, have expressed strong reservations about the bill. Their concerns often center on the potential administrative burden and compliance costs associated with the disclosure and auditing requirements. Industry representatives argue that the scope and detail of required disclosures might be overly broad, potentially revealing proprietary information or hindering rapid innovation in a highly competitive global market. They also raise questions about the feasibility and standardization of comprehensive algorithmic bias audits across diverse and rapidly evolving AI models. Opponents suggest that alternative approaches, perhaps focusing on the outcomes of AI deployment rather than internal development processes, might be more effective and less burdensome for companies.

The debate highlights a central tension in AI governance: how to foster innovation and economic growth while simultaneously ensuring public safety, privacy, and equity through effective regulation.

Next Steps and Broader Context

Following its successful passage through the Senate Judiciary Committee, SB 321 now moves to the Senate Appropriations Committee. This stage is critical as the committee will evaluate the bill’s potential fiscal impact on the state government and potentially on regulated entities. Bills with significant financial implications often face intense scrutiny here. If approved by Appropriations, SB 321 would then proceed to a vote by the full California Senate.

The advancement of SB 321 is indicative of a broader trend in California and globally towards establishing regulatory frameworks for artificial intelligence. Because California is home to many of the world’s leading technology companies, its actions in this space are often seen as bellwethers for potential federal or international regulatory approaches. The focus on transparency regarding training data and the explicit requirement for algorithmic bias audits represent a significant step beyond earlier AI policy discussions, moving towards concrete mandates for developers. This legislative action underscores the increasing urgency felt by policymakers to establish clear rules of the road for the rapidly evolving AI sector, aiming to harness its potential benefits while mitigating its substantial risks.